If you’re a B2B marketer, you probably read survey reports on a fairly regular basis. They have become popular marketing tools for companies that provide marketing-related services and/or technologies. Survey reports can be valuable sources of information about marketing trends, practices, and technologies, but they can also mislead. Perhaps more accurately, survey results and reports can make it easy for us to draw inaccurate, or at least unjustified, conclusions.

A survey report published this past spring provides a good vehicle for illustrating my point. For reasons that will become obvious, I’ll refer to this research as the “PA survey” and the “PA report.” I’m using this report, not because it is particularly flawed, but because it resembles many of the research reports I review.

The PA report was prepared by a well-known research firm, and the primary focus of the PA survey was to show the impact of predictive analytics on the B2B demand generation process. The PA survey was sponsored by one of the leading providers of predictive analytics technology.

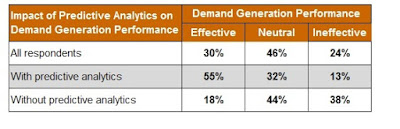

The PA survey asked participants to rate the effectiveness of their demand generation process as Effective, Neutral, or Ineffective. The PA report then provides data that shows the correlation between the use of predictive analytics and demand generation performance. The following table shows that data:

The PA report contains very strong statements regarding the impact of predictive analytics on demand generation performance. For example: “Overall, less than one-third of study participants report having a B2B demand generation process that meets objectives well. However, when predictive analytics are applied, process performance soars, effectively meeting the objectives set for it over half of the time.” (Emphasis in original)

The PA report doesn’t explicitly state that predictive analytics was the sole cause or the primary cause of the improved demand generation performance, but it comes very close. The issue is: Do the results of the PA survey support such a conclusion?

One of the fundamental principles of data analysis is that correlation does not imply causation. In other words, data may show that two events or conditions are statistically correlated, but this alone doesn’t prove that one of the events or conditions caused the other. Many survey reports devote a great deal of space to describing correlations, but most fail to remind us that correlation doesn’t necessarily mean causation.

The following chart illustrates why this principle matters. The chart shows that from 2000 through 2009, there was a strong correlation (r = 0.992558) between the divorce rate in Maine and the per capita consumption of margarine in the United States. (Note: To see this and other examples of hilarious correlations, take a look at the Spurious Correlations website by Tyler Vigen.)
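For readers who want to see what an r value like that actually measures, here is a minimal Python sketch of the Pearson correlation coefficient. The two series are invented for illustration and are not the Maine divorce or margarine figures. They share nothing except a downward trend over the same ten years, and that alone is enough to produce a very high r.

```python
import math


def pearson_r(xs, ys):
    """Return the Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two sequences of the same length, at least 2 values each")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Covariance and variances; the shared 1/n factors cancel, so they are omitted.
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("r is undefined when either series is constant")
    return cov / math.sqrt(var_x * var_y)


# Two hypothetical, unrelated metrics that both happen to decline over ten years.
metric_a = [10, 9, 8, 7, 6, 5, 4, 3, 2, 1]
metric_b = [20, 19, 17, 16, 15, 13, 12, 11, 9, 8]

print(round(pearson_r(metric_a, metric_b), 3))  # prints 0.998
```

An r this close to 1 says only that the two series move together. It says nothing about why.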

I doubt that any of us would argue that there’s a causal relationship between the rate of divorces in Maine and the consumption of margarine, despite the high correlation. These two “variables” just don’t have a common-sense relationship.

But when there is a plausible, common-sense relationship between two events or conditions that are also highly correlated statistically, we humans have a strong tendency to infer that one event or condition caused the other. Unfortunately, this human tendency can lead us to see a cause-and-effect relationship in cases where none actually exists.

Many marketing-related surveys are narrowly focused, and this can also entice us to draw erroneous conclusions. The results of the PA survey do show that there was a correlation between the use of predictive analytics and the effectiveness of the demand generation process among the survey participants. But what other factors may have contributed to the demand generation effectiveness experienced by this group of survey respondents, and how important were those other factors compared to the use of predictive analytics?

For example, the PA report doesn’t indicate whether survey participants were asked about the size of their demand generation budget, or the number of demand generation programs they run in a typical year, or the use of personalization in their demand generation efforts. If this data were available, we might well find that all of these factors are also correlated with demand generation performance.
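To show how a factor the survey never asked about can produce exactly this kind of correlation, here is a small Python sketch built on an entirely hypothetical population of 200 firms. It assumes that demand generation effectiveness depends only on budget (the hidden factor) and not at all on predictive analytics, but that high-budget firms are far more likely to use predictive analytics. The numbers are invented for illustration and do not come from the PA survey.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Firm:
    high_budget: bool  # the hidden factor the survey never asked about
    uses_pa: bool      # does the firm use predictive analytics?
    effective: bool    # does it rate its demand gen process "Effective"?


def make_group(high_budget, uses_pa, size, n_effective):
    """Build `size` similar firms, the first `n_effective` of which are effective."""
    return [Firm(high_budget, uses_pa, i < n_effective) for i in range(size)]


# Hypothetical population. By construction, effectiveness depends ONLY on budget
# (60% for high-budget firms, 20% for low-budget firms) and not on predictive
# analytics. High-budget firms are simply more likely to use it.
firms = (
    make_group(True, True, 80, 48)      # high budget, uses PA
    + make_group(True, False, 20, 12)   # high budget, no PA
    + make_group(False, True, 20, 4)    # low budget, uses PA
    + make_group(False, False, 80, 16)  # low budget, no PA
)


def effective_rate(group):
    return sum(f.effective for f in group) / len(group)


def rates_by_pa(group):
    """Return (effective rate among PA users, effective rate among non-users)."""
    users = [f for f in group if f.uses_pa]
    non_users = [f for f in group if not f.uses_pa]
    return effective_rate(users), effective_rate(non_users)


# Naive comparison across all firms: PA users look far more effective.
with_pa, without_pa = rates_by_pa(firms)
print(f"all firms:   PA {with_pa:.0%} vs. no PA {without_pa:.0%}")

# Compare within each budget level (stratification): the gap disappears.
for level, label in ((True, "high budget"), (False, "low budget")):
    stratum = [f for f in firms if f.high_budget == level]
    with_pa, without_pa = rates_by_pa(stratum)
    print(f"{label}: PA {with_pa:.0%} vs. no PA {without_pa:.0%}")
```

Comparing results within levels of a suspected factor is one simple check, but it only works for factors that were actually measured. That is why the questions a survey did not ask matter so much.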

There are a couple of important lessons here. First, whenever a survey report states or implies that improved marketing performance of some kind is correlated with the use of a particular marketing practice or technology, you should remind yourself that correlation doesn’t necessarily mean causation. And second, it’s critical to remember that the performance of any major aspect of marketing is very likely to be caused by several factors, not just those addressed in any one survey report.

One final comment. Despite what I have written in this post, I actually believe that predictive analytics can improve B2B demand generation performance. What we don’t have yet, however, is reliable and compelling evidence regarding how much improvement predictive analytics will produce, and what other practices or technologies may be needed to produce that improvement.

Top image courtesy of Paul Mison via Flickr CC.

Of course, this isn’t a post about predictive analytics. It’s a post about what is real and effective in research analysis, in reported findings and conclusions, and in the recommendations made by the analyst or research contractor. The key point: “One of the fundamental principles of data analysis is that correlation does not imply causation. In other words, data may show that two events or conditions are statistically correlated, but this alone doesn’t prove that one of the events or conditions caused the other. Many survey reports devote a great deal of space to describing correlations, but most fail to remind us that correlation doesn’t necessarily mean causation.”

As a stakeholder experience research and training consultant, I’ve been offering this caveat for years, and have frequently written about it. Too often, analysts and vendors take the easier, more direct, and frequently misleading path of relying on correlation rather than establishing causation. That’s one of the reasons why, for example, evaluations of customer experience and the resulting downstream consumer behavior overfocus on the tangible, rational, and functional components of value and underfocus on the subconscious, emotional, and memorable elements of value.

Thanks for the important reminder.

Regarding false conclusions, part of the problem lies in the human tendency to suspend skepticism. Big Data has only made the problem worse. We are hungry for statistical fact. We readily believe that numbers don’t lie, and that algorithms are inherently objective. Both beliefs are dangerously wrong. When it comes to predictive analytics, I have yet to see or hear a researcher say, ‘The model I’ve developed isn’t that great.’ That alone makes me skeptical.

My article, The Hundredth Monkey Effect, provides other examples. Also, I recommend Michael Shermer’s book, Why People Believe Weird Things, which includes a fabulous essay on pseudo-science.

It seems to me it should be pretty easy to figure out whether predictive analytics are effective in any context by applying an experimental or quasi-experimental design to actual results. Asking people what they “think” is kind of silly when you consider that demand gen results should be directly measurable: open rates, inquiries, and conversions. Perhaps I am a bit old-fashioned… but why ask someone what they think when you can see what actually happened!
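The experimental approach suggested above can be sketched in a few lines of Python. This is a minimal sketch that assumes you can randomly assign incoming leads either to a group prioritized with predictive lead scoring or to a control group handled without it, and then count conversions in each. The function names and the conversion counts are hypothetical. The comparison uses a standard two-proportion z-test, one of several reasonable choices.

```python
import math
import random


def assign_randomly(lead_ids, seed=None):
    """Randomly split leads into a treatment group and a control group of (nearly) equal size."""
    rng = random.Random(seed)
    shuffled = list(lead_ids)
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return shuffled[:half], shuffled[half:]


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Two-sided z-test for the difference between two conversion rates.

    Returns (difference in rates, z statistic, two-sided p-value). Uses the
    pooled normal approximation, which is reasonable only when each group has
    a fair number of conversions and non-conversions.
    """
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_a - p_b) / se
    # Two-sided p-value: 2 * (1 - Phi(|z|)) equals erfc(|z| / sqrt(2)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return p_a - p_b, z, p_value


# Hypothetical experiment: 2,000 leads split at random; the treatment group is
# prioritized with predictive lead scoring, the control group is not.
treatment, control = assign_randomly(range(2000), seed=7)
# Invented outcome counts, for illustration only.
diff, z, p = two_proportion_z_test(60, len(treatment), 45, len(control))
print(f"lift: {diff:.1%}  z = {z:.2f}  p = {p:.3f}")
```

With these made-up numbers, a 1.5-point lift on 1,000 leads per group gives a p-value of roughly 0.13, so it is not strong evidence on its own. Random assignment is what lets a clear result be read as cause and effect, which a survey of opinions cannot do.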