Two noted economists, Kenneth Rogoff and Carmen Reinhardt, recently had their findings on country debt to GDP ratios questioned, as it was discovered an Excel spreadsheet error led to some grave miscalculations. And while plenty of financial bloggers and economists took the opportunity to gloat over Rogoff and Reinhardt’s misfortune, there is a larger point here: just because mathematical calculations are wrong, it doesn’t mean a particular idea isn’t directionally sound.

In 2010, Rogoff and Reinhardt published a paper on the link between high public debt and slower economic growth. Their findings showed that when a country reached a debt level of greater than 90% of GDP, that country’s growth would slow to a crawl. This paper was subsequently used as the empirical basis for fiscal austerity—or belt tightening—for many European countries.

However, since the publishing of Rogoff and Reinhardt’s 2010 paper, their findings have been under intense scrutiny. Facing pressure to release their methodology and data, Rogoff and Reinhardt finally let other statisticians examine the study’s underlying calculations.

When Rogoff and Reinhardt’s Excel spreadsheets were released, a pair of graduate students discovered some coding errors. One key error omitted five countries from the calculations, which changed the mean of negative 0.1% economic growth to a positive 2.2%, a pretty significant switch! In other words, the conclusion that the “magic number of 90% debt to GDP equates to slow growth” wasn’t so magical after all.

Predictably, mainstream economists like Paul Krugman were quick to pounce. In his column, “Holy Coding Error, Batman“, Krugman called the error “embarrassing”, a “failure” and concluded it was reason enough to discount the underlying message that countries with higher debt could see slower growth in the future.

Krugman’s gloating aside, we should note that just because calculations supporting a particular idea are wrong, it doesn’t necessarily mean the proverbial “baby” should be tossed out with the “bathwater”.

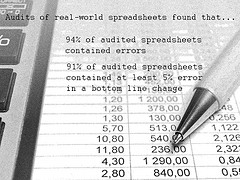

Here’s why: an article on WSJ‘s Market Watch cites a few studies showing 88% of spreadsheets contain errors of some kind. Ray Panko, a professor of IT management at University of Hawaii says that spreadsheet “errors are pandemic”.

Now whether you believe the 88% number is correct, or even if you discount it by half—as a consultant friend of mine suggests—it’s still a whopper of a number!

Going forward, with the knowledge a fair percentage of excel calculations are likely flawed in some manner, it makes sense that while we should expect the numbers supporting an idea need to be accurate, we should also understand that there could be errors. And because there could be calculation errors, we need to decide if the idea—outside any erroneous calculations—is a sound idea, or not.

Of course, there are instances where it’s critical to get mathematical calculations correct such as launching rockets, landing planes, engineering a building or bridge etc. But let’s also be careful not to immediately throw away an idea as “false” simply because it’s discovered someone made a correctible excel spreadsheet error.

Getting back to the Rogoff and Reinhardt commotion, this is exactly what Financial Times columnist Anders Aslund has in mind when he writes, “(While) the critique of Reinhart and Rogoff correctly identifies some technical errors in their work, one cannot read it and conclude the case for austerity is much weakened. High public debt is still a serious problem.” I would add this is especially true for countries where their debt is not denominated in their own currency.

With the realization that most spreadsheets have errors, we should check, double check, and triple check Excel calculations to ensure accuracy. Peer review of excel calculations is also a recommended approach.

But let’s also not be so quick to throw out perfectly good ideas where it’s discovered some excel miscalculations, or omissions have skewed the results. After all, a key idea may not be precisely supported by the maths, but still may be directionally correct. Or as New York Fund Manager Daniel Shuchman says, we don’t need to touch the stove to prove it’s hot.

Questions:

What are the key lessons in the Rogoff and Reinhardt debacle? Mistakes in treating correlation for causation? Sloppy coding? Applying too much historical data where conditions may have changed? Applying too little data (cherry-picking)? What say you?