I’ll never forget shoplifting class.

It was a workshop for associates at the retail chain I worked for in high school. The idea was to help us prevent shoplifting by showing us how shoplifters operated.

The class was amazing. We learned advanced techniques used by professionals, such as how to defeat alarm sensors, conceal piles of merchandise, or confuse clueless salespeople.

Quite a few thefts were prevented as a result of the class.

So, in the spirit of that training, I bring this blog post to you. Many customer service professionals are willing to stoop to underhanded means to artificially boost survey scores.

This post will help you catch them.

Technique #1: Manipulate Your Sample

You can’t survey everyone, so companies survey a small portion of their customers, called the sample.

Ideally, your sample represents the thoughts and opinions of all your customers. However, you can make a few tweaks to increase the likelihood that only happy customers are surveyed.

For example, you could survey customers who complete a transaction using self-service. You’ll likely get high scores since self-service transactions are typically simple and you are only surveying people who succeeded. Customers who get frustrated and switch to another channel for live help won’t be counted in this survey.

There are other ways to get higher scores by being selective about your sample.

- Limit your survey to channels with simpler transactions, like chat.

- Limit your survey to people who have contacted you just once.

- Limit your survey to people who contact you for certain types of transactions.
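To see how much a selective sample can move the number, here is a minimal sketch in Python. The customer data is entirely made up for illustration, and the channel labels (`self_service`, `switched_to_phone`) and the helper `average_score` are hypothetical names, not taken from any real survey tool.

```python
# Made-up data: each row is (channel, score on a 1-5 scale).
# 70 customers finished in self-service; 30 gave up and called instead.
customers = (
    [("self_service", 5)] * 50
    + [("self_service", 4)] * 20
    + [("switched_to_phone", 2)] * 20
    + [("switched_to_phone", 1)] * 10
)


def average_score(rows):
    """Plain average of the scores in a list of (channel, score) rows."""
    return sum(score for _, score in rows) / len(rows)


# Surveying everyone versus surveying only self-service successes.
everyone = average_score(customers)
self_service_only = average_score(
    [row for row in customers if row[0] == "self_service"]
)

print(round(everyone, 2))           # 3.8
print(round(self_service_only, 2))  # 4.71
```

Under these assumed numbers, nothing about the service changed. The score rose only because the frustrated customers were never asked.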

Technique #2: Manually Select Respondents

Some employees can manually select survey respondents. This enables them to target happy customers while leaving out the grumpy ones.

The survey invitation at the bottom of a register receipt is an excellent example. If a customer is obviously happy, the employee can circle the invitation, write down his or her name, and politely ask the customer to complete the survey.

What if the customer is grumpy? It’s pretty easy to tear off the receipt above the survey invitation so the customer never sees it.

Look for any situation where employees have some manual control over who gets a survey. There’s the potential for an employee to be choosy about who gets surveyed and who doesn’t.

Technique #3: Survey Begging

This occurs when an employee asks a customer to give a positive score on a survey by explaining how it will directly benefit the customer, the employee, or both.

I’ve written about this scourge before, but it’s worth mentioning again here. Employees beg, plead, and even offer incentives to customers in exchange for a good score.

In one example, a retail store manager offered a 20 percent discount in exchange for a perfect 10 on a customer service survey.

In another example, a cashier stamped register receipts with the requested survey response:

Image credit: Jeff Toister

Technique #4: Fake Surveys

Anonymous survey systems are easy to game. These include pen and paper surveys or electronic surveys that aren’t tied to a specific transaction or customer record.

Unscrupulous employees have been known to enter fake surveys, complete with top ratings and glowing comments. They enlist their friends and family members to do the same.

Technique #5: Incentivize Employees

Give employees an incentive to get high survey scores and you’ll likely learn a few new tricks.

In one company, technical support was divided into Tier 1 for easy inquiries and Tier 2 for more complicated requests. Tier 1 employees quickly learned they could transfer any upset customer to the Tier 2 team to avoid having that person complete a survey about them.

The poor Tier 2 employee was now stuck with the angry customer and would be the subject of any follow-up survey.

Why would the Tier 1 employees do this? They earned a cash bonus each month if they kept their survey scores above a certain level.

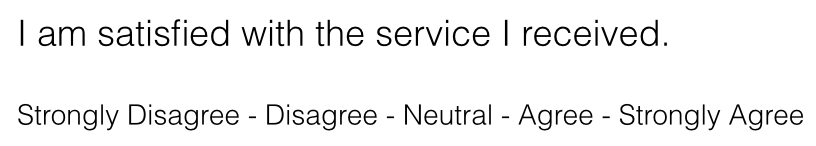

Technique #6: Write Positive Survey Questions

Survey questions can easily be slanted to elicit more positive responses. Consider these two examples.

This question is positively worded. Notice that the threshold for giving a top rating of “Strongly Agree” is pretty low; the customer merely has to be satisfied with the service they received.

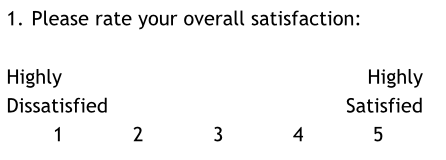

This question is neutral. It’s more likely to get a lower overall rating even though the feedback may be more accurate.

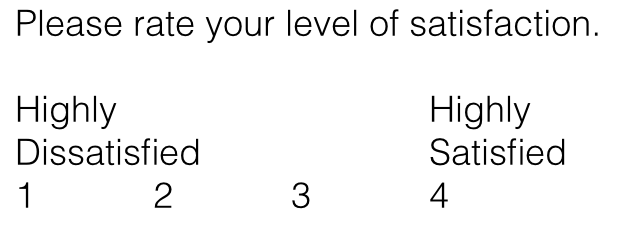

Technique #7: Use an Even Scale

There’s a long-running argument over whether customer surveys should have an odd- or even-point scale.

An odd-numbered scale, such as 1 – 5, provides customers with the option to provide a neutral rating.

An even-numbered scale, such as 1 – 4, forces customers to choose a positive or negative overall rating.

More often than not, you’ll tip customers into a positive rating by eliminating the mid-point.

Technique #8: Change Your Scoring Process

The exact meaning of a “customer satisfaction rate” is up for interpretation. You can interpret this loosely to increase your score.

Let’s say you survey 100 customers using a scale of 1 – 5 with the following scale points and responses:

- 1 = Highly Dissatisfied (2 responses)

- 2 = Dissatisfied (6 responses)

- 3 = Neutral (7 responses)

- 4 = Satisfied (45 responses)

- 5 = Highly Satisfied (40 responses)

You could report the score as a weighted average and call it 4.15 or 83 percent. Or, you could simply add the satisfied (45) and highly satisfied (40) customers and give yourself an 85 percent rating.

Even better, combine this technique with an even-numbered scale. Those same 100 customers might respond this way:

- 1 = Highly Dissatisfied (2 responses)

- 2 = Dissatisfied (8 responses)

- 3 = Satisfied (50 responses)

- 4 = Highly Satisfied (40 responses)

Suddenly, you can boast of a 90 percent customer satisfaction rating from the same group! Compared to the 83 percent weighted average, this little bit of trickery just boosted the score by 7 percentage points.
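The arithmetic behind these competing scores can be checked with a short Python sketch. The response counts come from the two examples above; the function names (`weighted_average`, `percent_of_max`, `satisfied_rate`) are just illustrative labels, not standard industry terms.

```python
def weighted_average(counts):
    """Mean score, where counts maps each scale point to its response count."""
    total = sum(counts.values())
    return sum(score * n for score, n in counts.items()) / total


def percent_of_max(counts):
    """Weighted average expressed as a percentage of the top scale point."""
    total = sum(counts.values())
    points = sum(score * n for score, n in counts.items())
    return 100 * points / (total * max(counts))


def satisfied_rate(counts, satisfied_from):
    """Percentage of responses at or above the lowest 'satisfied' scale point."""
    total = sum(counts.values())
    satisfied = sum(n for score, n in counts.items() if score >= satisfied_from)
    return 100 * satisfied / total


five_point = {1: 2, 2: 6, 3: 7, 4: 45, 5: 40}
four_point = {1: 2, 2: 8, 3: 50, 4: 40}

print(weighted_average(five_point))   # 4.15
print(percent_of_max(five_point))     # 83.0
print(satisfied_rate(five_point, 4))  # 85.0
print(satisfied_rate(four_point, 3))  # 90.0
```

The same 100 customers produce 83, 85, or 90 percent depending only on the scale and the formula. That is why it pays to ask exactly how a reported "satisfaction rate" was calculated.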

Technique #9: Adopt a Generous Error Procedure

Some people advocate rejecting surveys with an obvious error.

For example, let’s say a customer rates your service as a 1, the lowest score possible, and then writes:

“Hands-down the best service ever. If I could give a higher score I would. I absolutely love their service!!”

You can reasonably conclude this customer meant to give a 5, not a 1. Using that logic, the survey could be removed. Some unscrupulous people might correct the score to a 5 (the highest score possible).

This technique manipulates your scores directly, so unethical service leaders might adopt a generous error procedure.

For example, a neutral score of a 3 combined with a mildly positive comment might be kicked out as an error or even adjusted to a 4 based on the comment. These adjustments can really add up and significantly impact an overall average!
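Here is a rough Python sketch of how those adjustments add up. It reuses the 100 responses from the 1 – 5 example in Technique #8 and assumes, as a deliberately extreme and hypothetical case, that every neutral 3 is either bumped up to a 4 or thrown out as an "error." A real error procedure would touch only some of them.

```python
def mean_score(counts):
    """Mean score, where counts maps each scale point to its response count."""
    total = sum(counts.values())
    return sum(score * n for score, n in counts.items()) / total


original = {1: 2, 2: 6, 3: 7, 4: 45, 5: 40}

# Hypothetical rule A: "correct" every neutral 3 to a 4.
bumped = dict(original)
bumped[4] += bumped.pop(3)

# Hypothetical rule B: discard every neutral 3 as an error.
discarded = {score: n for score, n in original.items() if score != 3}

print(round(mean_score(original), 2))   # 4.15
print(round(mean_score(bumped), 2))     # 4.22
print(round(mean_score(discarded), 2))  # 4.24
```

Neither rule touches a single low score, yet both raise the average.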

Conclusion

I want to be clear that I don’t advocate the use of any of these techniques.

The real purpose of a customer service survey is to gain actionable insight from your customers that allows you to improve service. You can’t do that if you use these methods to artificially inflate your scores.

You can learn more about sound methods for implementing a customer service survey via this training video on lynda.com.

You’ll need a lynda.com account to watch the entire course, but you can get a 10-day trial.

I’ll offer another one. Use of a ‘satisfaction’ rating scale rather than a ‘performance’ or ‘experience’ scale will almost always yield higher scores. Why? Satisfaction is attitudinal, functional, passive, and transactional. It’s superficial. Experience and performance represent more emotion and relationship, and as such are more rigorous measures, often yielding lower scores: https://beyondphilosophy.com/oh-anybody-still-measuring-customer-satisfaction/

It always amazes me when leaders put the foxes in charge of building the hen house and then are surprised at the results. The bigger issue is the need for research to be an independent voice and the need to protect against a group doing its own measurement.

Hi Jeff,

Never thought about it much but I have been “chosen” countless times by numbers 2 & 3. Yes, they are somewhat leading but who cares…I never take the survey. Am I wrong for doing that?

Howard – yes, the motivation is a big problem. Companies that use these tricks are usually doing measurement for the sake of measurement and not trying to gain meaningful insight.

Michael – I’m sure this list of survey tricks could go on and on!

Steve – I think it’s your choice as a customer. Any time a company asks you to take a survey, they’re asking you for a favor. You’re definitely not obligated.

Another issue is that research in my subject area (librarianship) indicates that a friendly librarian who gives the wrong answer is rated more highly than a gruff/non-sympathetic librarian who answers the question correctly. If this is true in general, I would draw the conclusion that this trait makes it more likely to keep friendly employees who damage the organization by giving incorrect information to customers.

Bob, you bring up a tricky situation! One issue that makes it worse is when customer service leaders just look at average scores and don’t dive into the details.

For example, one client I worked with had negative comments in 5 percent of their positive customer surveys. To your point, these customers really liked the person who served them, but still wanted to point out an issue. It took a comment analysis to spot these problems that otherwise would have been hidden.